Scientists at the University of Texas at Dallas, in collaboration with an industry partner, have introduced a novel approach to protecting quantum computers against adversarial attacks. Quantum computers, which can solve certain complex problems exponentially faster than classical computers, are increasingly being integrated into artificial intelligence (AI) applications such as those found in autonomous vehicles. However, just like traditional computers, quantum computers are susceptible to attacks.

The team’s solution, known as Quantum Noise Injection for Adversarial Defense (QNAD), focuses on countering attacks aimed at disrupting AI’s inference capabilities. Dr. Kanad Basu, an assistant professor of electrical and computer engineering at the Erik Jonsson School of Engineering and Computer Science, highlighted the potential risks associated with adversarial attacks on AI systems. He compared such attacks to placing a sticker over a stop sign, which could lead to an autonomous vehicle misinterpreting the sign and failing to stop. The goal of the QNAD approach is to bolster the security of quantum computer applications.

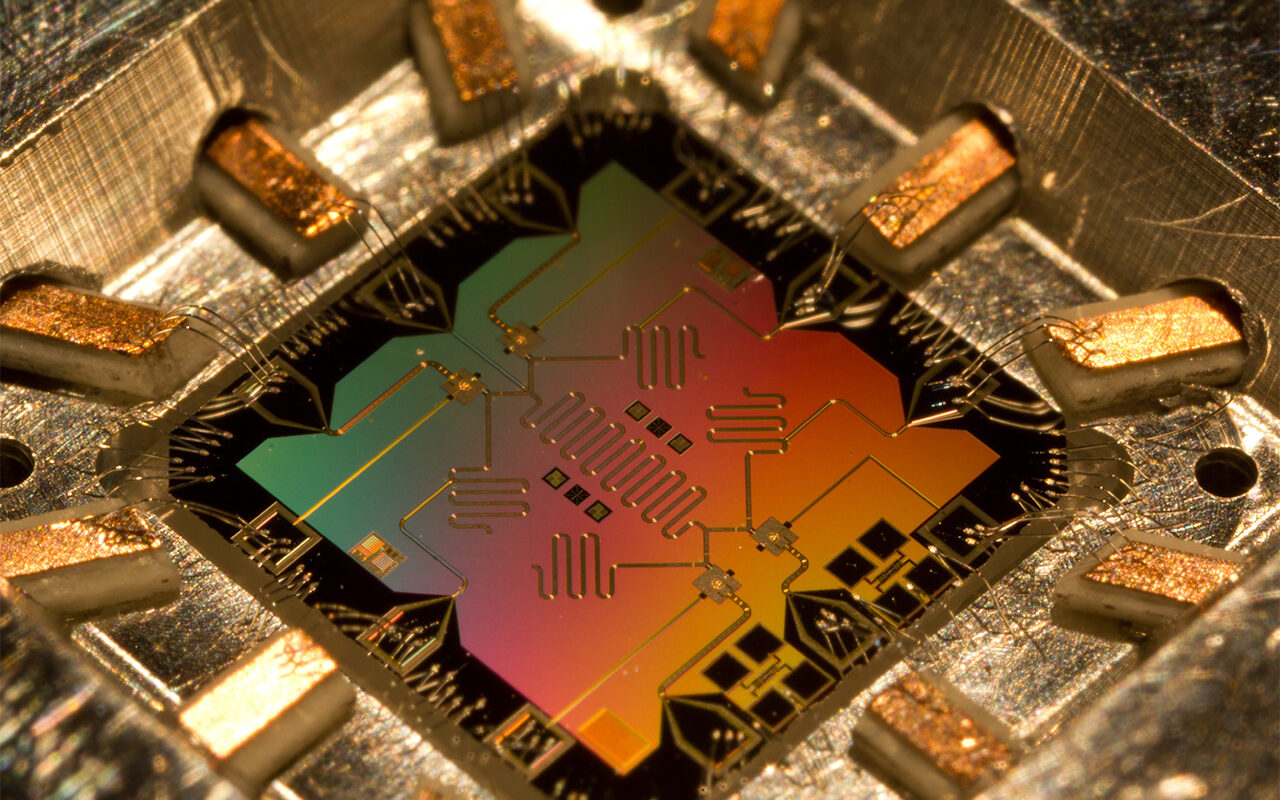

Presenting their research at the IEEE International Symposium on Hardware Oriented Security and Trust, the team showcased the effectiveness of their method in mitigating the impact of adversarial attacks. Quantum computers use qubits, which rely on quantum mechanics to represent information, and this offers significant computational advantages over classical computers. However, these systems are prone to noise and crosstalk, which introduce errors into computations.

The researchers’ approach puts quantum noise and crosstalk to use in neutralizing adversarial attacks. By incorporating crosstalk into the quantum neural network (QNN), the model that carries out the machine learning task, the method enhances the security of quantum computers. The team demonstrated a significant improvement in the accuracy of AI applications under attack when QNAD was used.

Shamik Kundu, a computer engineering doctoral student and co-author of the study, described the QNAD framework as playing a complementary role in protecting quantum computer security. He compared it to a seat belt in a car: just as a seat belt minimizes the effects of a crash, QNAD reduces the impact of an adversarial attack on the QNN model.

In summary, the researchers’ innovative approach aims to fortify the resilience of quantum computers against malicious attacks, ultimately enhancing the security and reliability of AI applications in various domains.
